Tesla Releases FSD V13.2: Adds Ability to Reverse, Start FSD from Park, Autopark at Destination and Much More

Last night Tesla finally launched FSD V13.2 with a bevy of new features for its early access testers with update 2024.39.10. While Tesla narrowly missed its loose Thanksgiving deadline, it still managed to deliver the update in November, marking another big win for the Tesla AI team.

Early Access Only

FSD V13.2 started to roll out to early access testers - who generally get hands-on with the latest builds in advance of everyone else. They’re the equivalent of Tesla’s trusted testers who aren’t running internal builds - and they’re able to catch more scenarios outside of Tesla’s pretty extensive safety training suite.

If no major issues are spotted, Tesla will begin a slow rollout to more and more vehicles over the next few weeks. Assuming all goes well with this build, it could be in most customers’ hands by Christmas.

Of course, as a reminder, FSD V13 is still limited to vehicles equipped with AI4—and for now, anything but the Cybertruck. The Cybertruck is on its own FSD branch, without access to Actually Smart Summon and Speed Profiles, but with End to End on the Highway. The Cybertruck was recently upgraded to update 2024.39.5 (FSD V12.5.5.3).

FSD V13.2 Features

Let’s take a look at everything in FSD V13.2 - which is the build version going out now on Tesla software update 2024.39.10. While we previously got a short preview of what was expected with V13, we now can see everything included in FSD V13.2.

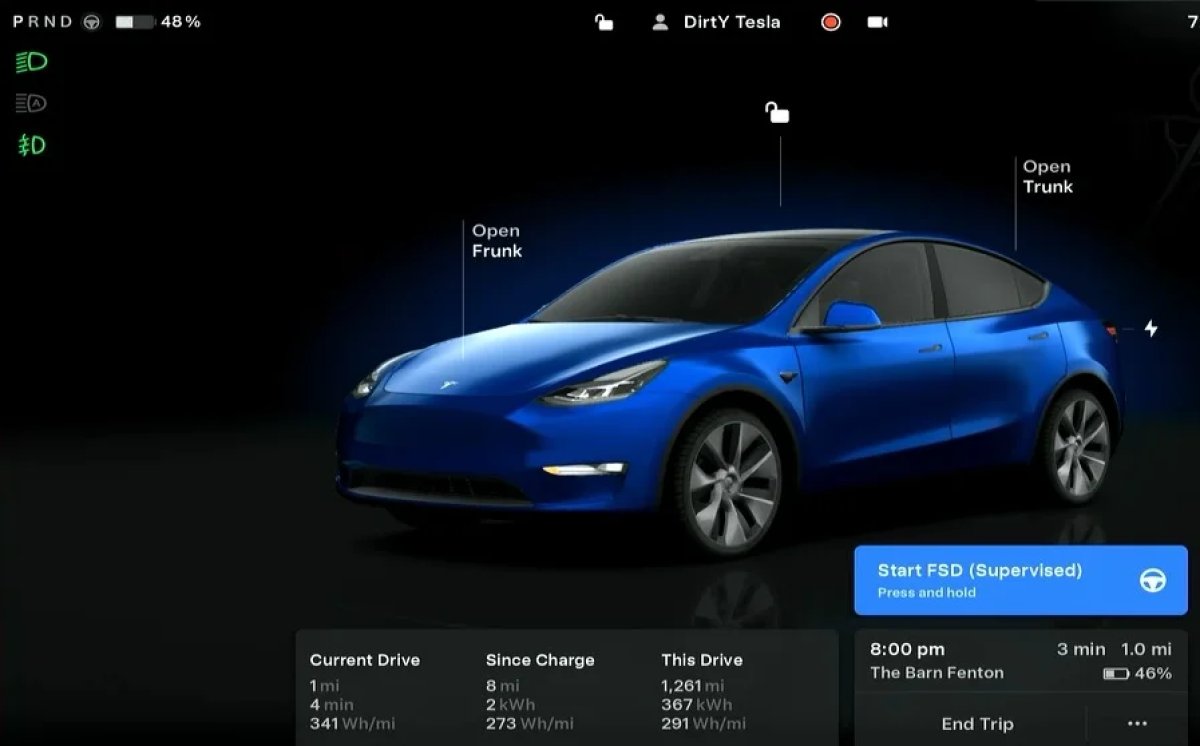

Start FSD from Park, Reverse & Park at Destination

Parked to Parked has been the goal for FSD for quite a while now. Ever since the release of V12.3.6, when V12.5 was but a glimmer in the Tesla AI team’s eye, Elon Musk has been saying that it would be the key to demonstrating Tesla’s autonomy framework.

Now, with V13, FSD has integrated three key functionalities.

Unpark: FSD can now be started while you’re still parked. Simply set your destination and tap and hold the new Start FSD button. The car will now shift out of park and into drive or reverse in order to get to its destination.

Reverse: FSD has finally gained the ability to shift. Not only can the vehicle go into reverse now, but it can seamlessly shift between Park, Drive and Reverse all by itself. It can also perform 3-point turns.

Park: When FSD reaches its destination, it will now park itself if it finds an open parking spot near the final location. Tesla says that further improvements are coming to this, and drivers will be able to pick between pulling over, parking in a parking spot, driveway or garage in the future.

If everything goes smoothly on a drive, users will no longer need to give the vehicle any input at all, from its original location to its final parking spot. The driver only needs to supervise and step in if something goes wrong.

I tried to get FSD 13.2 to park in my garage but it instead did a 3 point turn and tried to escape 😱 pic.twitter.com/EzEYcNvuuA

— Dirty Tesla (@DirtyTesLa) December 1, 2024

Full Resolution AI4 Video Input

Until now, FSD V12.5 and V12.6 have been using reduced image quality at reduced framerates to match the lower resolution and lower refresh rate provided by Hardware 3 cameras. For the first time, FSD will use the cameras on AI4 (previously known as Hardware 4) at higher resolution and 36 frames per second.

In short, that means better image quality for both training and in use and higher accuracy for things like signage and distance measurement.
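As a rough illustration of what that frame rate means, the time between consecutive frames at 36 frames per second is simply 1/36 of a second. The short Python sketch below only does that arithmetic; the function name is made up for illustration and says nothing about how Tesla's software is actually implemented.

```python
def frame_interval_ms(frames_per_second: float) -> float:
    """Return the time between consecutive frames, in milliseconds."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return 1000.0 / frames_per_second


# 36 fps is the AI4 camera rate quoted in the article.
print(f"{frame_interval_ms(36):.1f} ms between frames")
```

At 36 frames per second, a new frame arrives roughly every 27.8 ms.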

Speed Profiles for All Roads

FSD V12.5.6.2 brought new and improved Speed Profiles to both city streets and highways, including the new Hurry Mode, which replaced Assertive Mode. However, V12.5.6.2 had a notable limitation: roads needed a speed limit of 50 mph (80 km/h) or higher. That restriction is now gone, and City Streets has Speed Profiles for all speed limits.

FSD 13 navigates parking lot then BACKS ITSELF INTO SUPERCHARGER STALL pic.twitter.com/f6vAeJ0mSe

— ΛI DRIVR (@AIDRIVR) December 1, 2024

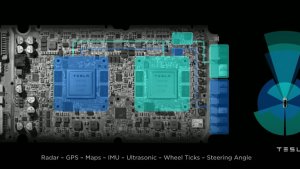

Native AI4 Inputs and Neural Network Architecture

Similar to the video resolution and refresh rate, AI4 has a lot of new hardware features that help optimize how fast FSD’s AI model can run. We dug into how Tesla’s Universal Translator streamlines FSD for each platform - this is a case of having fewer constraints and more optimization versus Hardware 3.

5x Training Compute

Cortex, Tesla’s massive new supercomputer cluster at Giga Texas, is now online and crunching data at a truly insane rate. It's one of the fastest AI clusters in the world—and it's dedicated to FSD. Tesla has 5x the training compute crunching away to solve the March of 9’s now that FSD is close to being feature complete.

Faster Decision Making

Tesla refactored how it handles image-to-processing in FSD V13 - another huge set of changes to improve performance. This release cuts photon-to-control latency in half, which is massive. In layman’s terms, that means faster decision-making: FSD was already faster than a human, and now it's twice as fast as it was before.

Insane product push on the week of my birthday.

We refactored the entire system to drastically simplify the pipeline — direct photons to control — yet providing a lot more functionality under the same unify framework.

It is probably one of the biggest rewrite in years when we… https://t.co/M0pr2KzcHv

— Yun-Ta Tsai (@YunTaTsai1) November 30, 2024
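To put the halved photon-to-control latency in perspective, here is a minimal Python sketch of how far a car travels while the system is still reacting. Tesla has not published an absolute latency figure in this article, so the 0.2 s baseline below is a purely hypothetical placeholder, as are the speed and the function name; only the 2x ratio comes from the release.

```python
def distance_during_latency_m(speed_m_per_s: float, latency_s: float) -> float:
    """Distance covered at constant speed during the reaction latency."""
    return speed_m_per_s * latency_s


SPEED_M_PER_S = 30.0       # illustrative speed (about 108 km/h)
BASELINE_LATENCY_S = 0.2   # hypothetical placeholder, NOT a Tesla figure
halved_latency_s = BASELINE_LATENCY_S / 2  # the "2x faster" claim

before = distance_during_latency_m(SPEED_M_PER_S, BASELINE_LATENCY_S)
after = distance_during_latency_m(SPEED_M_PER_S, halved_latency_s)
print(f"before: {before:.1f} m, after: {after:.1f} m")
```

Whatever the real baseline is, halving the latency halves the distance covered before the car can begin to respond.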

Data Collection for Audio Input

One of the features Tesla lists in FSD V13.2 is the ability for the vehicle to collect and share audio snippets with Tesla. The vehicle will ask you whether you’re okay with sharing 10-second audio files with Tesla so that the vehicle can detect emergency vehicles by sound in the future.

Camera Visibility Detection

The vehicle will now prompt you at the end of a drive if visibility issues are detected. The new option is under Controls > Service > Camera Visibility. Tesla will also retain images from the cameras when the vehicle experiences visibility issues during a drive so that you can analyze them later.

As of FSD V12.5.6.2, your Tesla will warn you when it needs cleaning - and guide you to help clean the cameras too. This, along with less annoying notifications that FSD is degraded, is going to be a fantastic change for those who aren’t driving around in sunny weather.

Better Collision Avoidance

All the changes to the AI model in V13 also brought changes to how the AI perceives and handles collision avoidance.

FSD has already earned a reputation for cleanly avoiding T-bone collisions in red light incidents, but it's going to get even better from here on out.

FSD 13 leaves parking lot (+ awkward interaction with other driver)

the smoothness is absolutely INSANE

it also saw the Model 3 backing up before I did, I was wondering why it wasn’t moving lol pic.twitter.com/MhKgVI2WX1

— ΛI DRIVR (@AIDRIVR) December 1, 2024

Vehicle to Fleet Communication

One of the features V12.5 was supposed to bring was fleet-based dynamic routing. If a route was closed, your Tesla would turn around and navigate through an alternative path - and also warn the rest of the fleet of the closure.

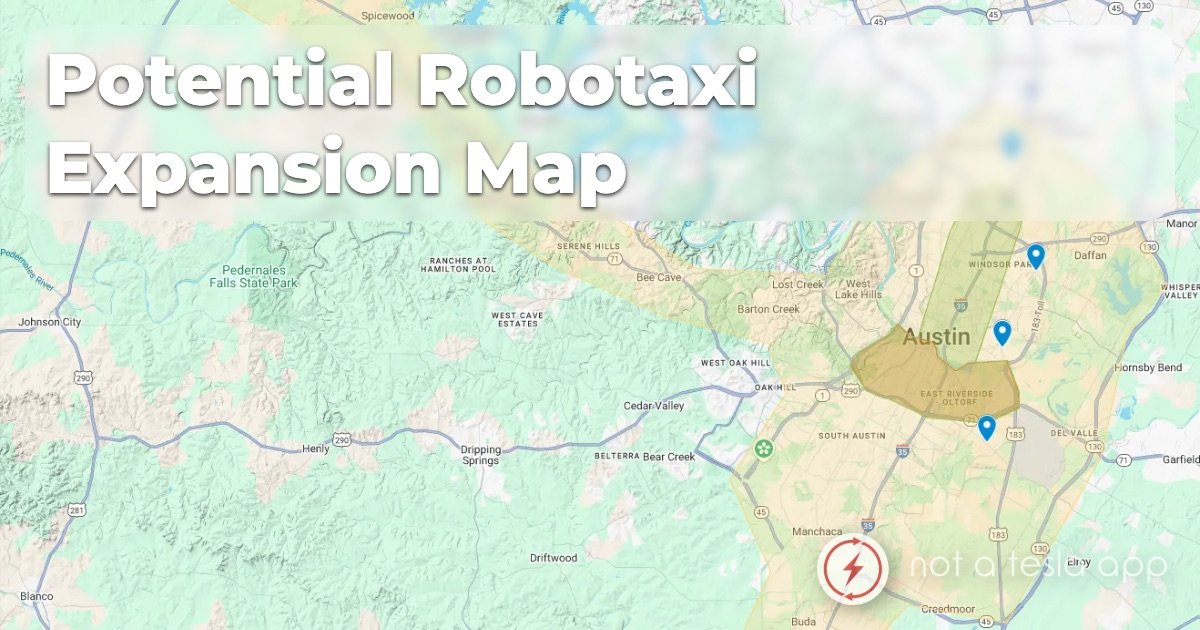

V13 lets AI4 vehicles do this, and it's another element of the Robotaxi network that Tesla needs to get off the ground to ensure that once they do begin to deploy their first fleets - they function well. So far, with new job postings for Robotaxi Engineers and talks with Palo Alto to launch a Robotaxi service, things are on track for both Unsupervised FSD and Robotaxi sometime in 2025.

Better Traffic Controller

Another big update is a redesigned traffic controller - which makes for smoother and more accurate tracking of other vehicles and objects around the vehicle. We dug into how the traffic controller processes information in this article here, where you can learn all about how Tesla’s signal processing works.

Here is highlight clip #2 from my FSD Supervised v13.2 Night First Impressions Drive. Enjoy the clips! @Tesla_AI pic.twitter.com/bLOjM0bVYi

— Chuck Cook (@chazman) December 1, 2024

Upcoming Improvements

Tesla has also listed a lot of upcoming improvements for FSD V13, including bigger models, audio inputs, better navigation and routing, improvements to false braking, destination options, and better camera occlusion handling. That’s a pretty big list for V13, so we’ll keep an eye on all these features, which are expected in a future release.

What About Hardware 3?

Tesla’s previous roadmap update didn’t mention HW3 getting FSD V13. Instead, those of us on Hardware 3 will need to keep waiting and looking for Tesla to optimize another FSD Model - until then, you’ll be on FSD V12.5.4.2, which is still a fairly capable build.

Tesla has mentioned that it could potentially upgrade HW3 computers - not cameras - if engineers aren’t able to get FSD Unsupervised working on HW3. While there isn’t a lot to share here yet, it certainly looks like HW3 owners will be receiving some sort of free hardware upgrade in the future, but it’s not clear yet when it will arrive or what it will be.

Keep an eye out in the new year for updates on what’s coming next with HW3. We hope to see an optimized V13 build eventually make its way to HW3 sometime in the future - Tesla has been working pretty hard on this, so let’s give them some time.

Release Date

For everyone who’s been patiently waiting to see more of FSD V13 since the sneaky reveal at We, Robot, you’ll be waiting a bit longer. This build is currently going out to early access testers, who serve as a critical step in Tesla’s safety verification process.

Once Tesla is comfortable with the rate of disengagement, Tesla will evaluate their results, make any final changes, and then begin rolling it out in waves. Fingers crossed, wider waves for V13 will make their way to AI4 S3XY vehicles and the Cybertruck by Christmas.
