What’s Coming in Tesla FSD V13

As part of an update to its AI roadmap, Tesla has also announced the features that will be in FSD v13. Tesla provided many details about what we can expect, and there’s a lot of info to break down.

Tesla’s VP of AI, Ashok Elluswamy, also revealed that FSD v13 is expected to make FSD Unsupervised feature complete. That doesn’t mean that autonomy will be ready, as each feature will still need to work at safety levels higher than a human, but it means every key feature of autonomous vehicles will be present in FSD v13.

Let’s examine the v13 feature list Tesla and Tesla employees have recently provided to see exactly what’s coming.

Higher Resolution Video & Native AI4

FSD v12 was trained on video at HW3 camera resolution, with footage from AI4 cameras downsampled to match. For the first time, Tesla will use AI4's native camera resolution to get the clearest image possible. Tesla is also increasing the capture rate to 36 FPS (frames per second). Together, these changes should make driving smoother and let the vehicle detect objects earlier and more precisely. It’ll be a big boon for FSD, but it comes at the cost of processing all of this additional information.

The HW3 cameras have a resolution of about 1.2 megapixels, while the AI4 cameras have a resolution of 5.44 megapixels. That’s roughly 4.5 times the raw pixel count, which is a lot of new data for the inference computer and AI models to deal with.

Yun-Ti Tsai, Senior Staff Engineer at Tesla AI, mentioned on X that the total data bandwidth is 1.3 gigapixels per second, running at 36 hertz, with nearly 0 latency between capture and inference. This is one of the baseline features for getting v13 off the ground, and through this feature update, we can expect better vehicle performance, sign reading, and lots of little upgrades.
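To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above (1.2 MP, 5.44 MP, 36 Hz, 1.3 gigapixels per second). The function names are hypothetical, and the assumption that every stream runs at full AI4 resolution and the full frame rate is ours, not something Tesla has stated.

```python
# Figures quoted in the article; treat them as rounded approximations.
HW3_MEGAPIXELS = 1.2           # approximate HW3 camera resolution
AI4_MEGAPIXELS = 5.44          # AI4 camera resolution
FRAME_RATE_HZ = 36             # v13 capture rate
TOTAL_GIGAPIXELS_PER_S = 1.3   # total bandwidth quoted on X


def resolution_ratio(new_mp: float, old_mp: float) -> float:
    """How many times more pixels the new camera captures per frame."""
    return new_mp / old_mp


def megapixels_per_second(megapixels: float, fps: float) -> float:
    """Pixel throughput of one camera stream, in megapixels per second."""
    return megapixels * fps


def implied_full_res_streams(total_gp_s: float, megapixels: float, fps: float) -> float:
    """Number of full-resolution streams the total bandwidth would cover.

    Assumption (ours): every stream runs at the same resolution and frame rate.
    """
    return total_gp_s * 1000 / megapixels_per_second(megapixels, fps)


if __name__ == "__main__":
    ratio = resolution_ratio(AI4_MEGAPIXELS, HW3_MEGAPIXELS)
    per_camera = megapixels_per_second(AI4_MEGAPIXELS, FRAME_RATE_HZ)
    streams = implied_full_res_streams(
        TOTAL_GIGAPIXELS_PER_S, AI4_MEGAPIXELS, FRAME_RATE_HZ
    )
    print(f"AI4 vs HW3 pixels per frame: {ratio:.2f}x")
    print(f"One AI4 camera at 36 Hz: {per_camera:.2f} MP/s")
    print(f"Implied full-resolution streams: {streams:.1f}")
```

Under these assumptions, a single AI4 camera produces just under 200 megapixels per second, so the quoted 1.3 gigapixels per second corresponds to roughly six to seven full-resolution streams. The article does not say how the bandwidth is actually split across cameras, so this is only a sanity check on the quoted figures.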

Bigger Models, Bigger Context, Better Data

The next big item is that Tesla will increase the size of the FSD model by three times and the overall context length by the same amount. What that means, in simple terms, is that FSD will have a lot more information to draw upon—both at the moment (the context length) and from background knowledge and training (model size).

In layman’s terms, Tesla has made the FSD brain bigger and increased the amount of information it can remember. This means that FSD will have a lot more data to work with when making decisions, both from what's happening right now and from what it has learned in the past.

Beyond that, Tesla has also scaled up its training data and training compute to match. Tesla is increasing the amount of training data by 4.2 times and its training compute by 5 times.

Video of the inside of Cortex today, the giant new AI training supercluster being built at Tesla HQ in Austin to solve real-world AI pic.twitter.com/DwJVUWUrb5

— Elon Musk (@elonmusk) August 26, 2024

Audio Intake

Tesla’s FSD has famously only relied upon visual data—equivalent to what humans can access. LiDAR hasn’t been on Tesla’s books except for model validation, and radar, while used in the past, was mostly phased out.

Now, Tesla AI will integrate audio intake into FSD’s models, with a focus on better handling of emergency vehicles. FSD will soon be able to react to emergency vehicles, even before it sees them. This is big news and is in line with how Tesla has been approaching FSD—through a very human-like lens.

We’re excited to see how these updates pan out, but there was one more thing. Ashok Elluswamy, VP of AI at Tesla, confirmed on X that they’ll add the ability for FSD to honk the horn.

Other Improvements

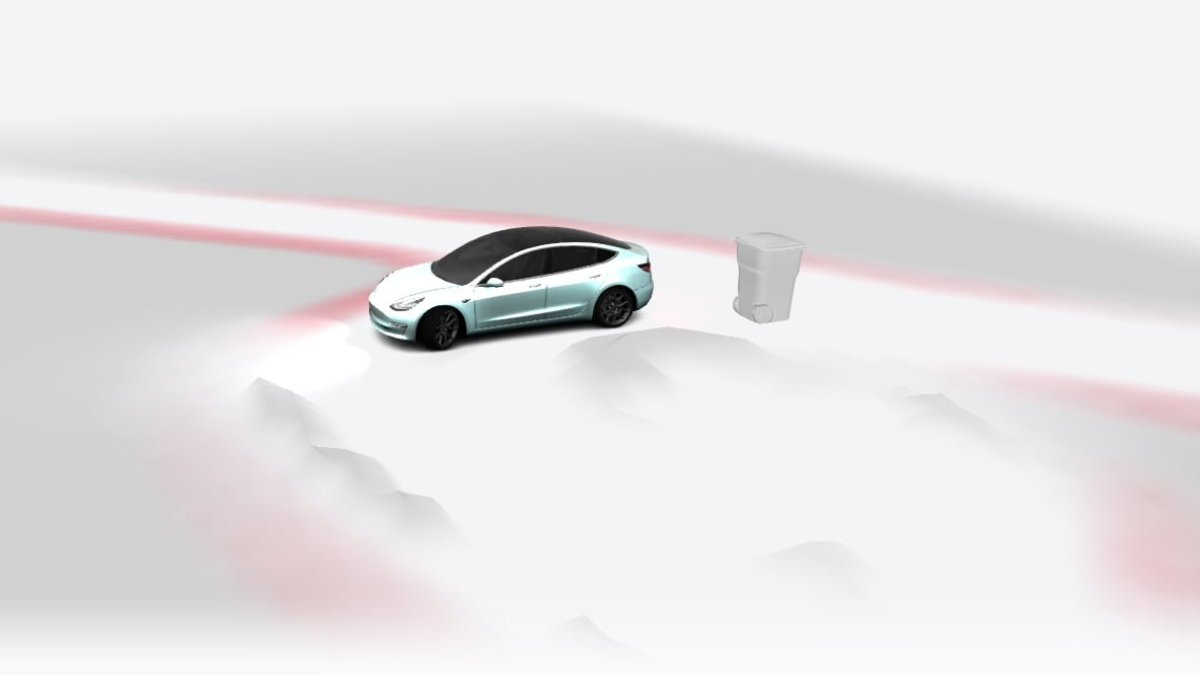

The other improvements, while major, can be summarized pretty simply. Tesla is focusing on improving smoothness and safety in various ways. The v13 AI will be trained to better predict and adapt when avoiding collisions, navigating, and following traffic controls. This will make its behavior more predictable for users and other drivers and improve general safety.

Beyond that, Tesla is also working on a better representation of the map and navigation inputs versus what FSD actually does. In complex situations, FSD may choose to take a different turn or exit, even if navigation is telling it to go the other way. This future update will likely close this gap and ensure that your route and FSD’s path planner match closely.

Of course, Tesla will also be working on adding Unpark, Reverse, and Park capabilities, as well as support for destination options, including parking in a spot, driveway, or garage or just pulling over at a specific point, like at an entrance.

Finally, they’re also working on adding improved camera self-cleaning and better handling of camera occlusion. Currently, FSD can and will clean the front cameras if they are obscured with debris, but only if they are fully blocked. Partial blockages do not trigger the wipers. Additionally, when the B-Pillar cameras are blinded by sunlight, FSD tends to have difficulties staying centered in the lane. This specific update is expected to address both of these issues.

FSD V13 Release Date

Tesla announced that FSD v13 will be released to employees this week; however, it’ll take several iterations before it’s released to the public. Tesla expects the customer release to come around v13.3 and, surprisingly, says this will happen around the Thanksgiving timeframe, just a few weeks away.

Tesla is known for delays with its FSD releases, so we’re cautious about the late November timeline. However, the real takeaway is that FSD v13 is expected to offer a substantial leap in capability over the next few months—even if it’s exclusive to AI4.
