Tesla FSD Beta v11.3 Improvements Explained in Plain English

It would be hard to find a more anticipated Tesla update than FSD Beta v11.3. Owners have been waiting for it since AI Day on September 30, 2022, and Elon Musk has kept them on edge with a steady stream of teasers and timelines. The update is now being tested by Tesla employees, which has led to some leaks and our first peek at the release. However, Musk has already stated that v11.3.2 will be the version that goes to the broader subscriber base, which suggests there will be plenty of tweaks after the initial rollout. That said, there is a lot to go through in the latest release notes.

Thanks to Dr. Know-it-all's excellent video (below), here is the breakdown and explanation of what we can expect to see when the newest update beams into Teslas in the United States and Canada.

Single Stack Sensation

Enabled FSD Beta on highway. This unifies the vision and planning stack on and off-highway and replaces the legacy highway stack, which is over four years old...

The single stack is here. In software terminology, a tech stack is the set of technologies that make up a given system. In this case, Tesla is unifying the vision and planning stack used on and off the highway. According to Tesla, the legacy highway stack, which is over four years old, relies on several single-camera and single-frame networks and was set up to handle simple lane-specific maneuvers.

By simple maneuvers, the company refers to merging on and off the highway and changing lanes. FSD is now able to do much more. Tesla has made considerable strides in the past four years, and the latest FSD Beta uses "multi-camera video networks and next-gen planner, that allows for more complex agent interactions with less reliance on lanes, make way for adding more intelligent behaviors, smoother control and better decision making." In translation, we should see much smoother, less robotic driving. For example, the Autopilot highway lane change, which predictably waited a few seconds before moving over, should act much more intuitively under the FSD stack.

Dr. Know-it-all speculates that the legacy stack controlling highway driving had become so reliable that the company had a high bar to beat when rolling out something new. Anyone who has used FSD Beta extensively, or has relied on it for highway driving for a long time, should keep this change in mind. After the update, your car will behave slightly differently the next time you merge onto the highway.

Voice Driver Notes

Added voice drive-notes. After an intervention, you can now send Tesla an anonymous voice message describing your experience to help improve Autopilot.

If the driver needs to intervene, this message will appear: "Autopilot Disengaged. What Happened? Press voice button to send Tesla an anonymous message describing your experience."

There used to be a button on the screen that you could tap to provide feedback (and it's still available for early testers). However, a tap only signaled a negative experience, with no way to explain what happened. The new feature should help Tesla engineers understand each situation better, since they can review the video alongside the driver's own description. The audio feedback is expected to be converted to text, which would keep the driver anonymous and let engineers search and read the messages.

Expanded Automatic Emergency Braking (AEB)

Expanded Automatic Emergency Braking (AEB) to handle vehicles that cross ego's path. This includes cases where other vehicles run their red light or turn across ego's path, stealing the right-of-way. Replay of previous collisions of this type suggests that 49% of the events would be mitigated by the new behavior. This improvement is now active in both manual driving and autopilot operation.

AEB has been around since the mid-2000s. The system applies the brakes if it detects that the vehicle in front is slowing down or has suddenly braked. Tesla's expanded version will monitor not just the traffic directly ahead but also vehicles crossing from the sides (such as cars running red lights) and anything else that is "stealing the right-of-way." The company says that replays of past collisions of this type suggest nearly half would be mitigated by the expanded system. Better yet, this is active in both Full Self-Driving and manual operation, making it another Tesla safety improvement that benefits every driver.

Improved Autopilot Reaction Time

Improved autopilot reaction time to red light runners and stop sign runners by 500ms, by increased reliance on object's instantaneous kinematics along with trajectory estimates.

Tesla has improved its Autopilot reaction time by 500 milliseconds, or half a second. It doesn't sound like much, but the improvement targets drivers running stop signs or red lights, where every fraction of a second counts. For example, let's say you are driving through your neighborhood at 25 miles per hour and approaching an intersection where you have the right of way. Suddenly a car appears, and by the time you realize it is not stopping, the collision has already happened. By relying more on an object's instantaneous kinematics along with trajectory estimates, Tesla gains back roughly the time it takes to blink. At 25 mph, your car is moving at about 36.7 feet (11.2 meters) per second, so half a second is worth about 18.3 feet (5.6 meters). Imagine what that extra distance would do in this situation. It's likely the difference between a collision and a near miss.
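The distance a car covers during a reaction delay is simple constant-speed arithmetic: distance equals speed times time. Here is a minimal Python sketch of that calculation. It assumes the car holds a steady speed for the whole delay, and the function name is ours, chosen for illustration only.

```python
MPH_TO_MPS = 1609.344 / 3600.0   # one mile per hour, in meters per second
METERS_TO_FEET = 1 / 0.3048


def distance_during_delay(speed_mph, delay_s):
    """Return (meters, feet) covered at a constant speed during delay_s seconds."""
    meters = speed_mph * MPH_TO_MPS * delay_s
    return meters, meters * METERS_TO_FEET


meters, feet = distance_during_delay(25, 0.5)
print(f"{meters:.1f} m, {feet:.1f} ft")  # 5.6 m, 18.3 ft
```

At highway speeds the same half second is worth far more. Calling `distance_during_delay(70, 0.5)` shows how quickly the margin grows with speed.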

Dr. Know-it-all Explains the Release Notes

Overall Driving Advancements

Improved handling through high speed and high curvature scenarios by offsetting towards inner lane lines.

Another improvement involves offsetting the vehicle towards the inner lane line through fast, tight curves rather than keeping it dead center in the lane. Biasing towards the inside of the arc is the trajectory most human drivers naturally take, and it helps the car avoid getting too close to vehicles coming from the other direction.

Improved longitudinal control response smoothness when following lead vehicles by better modeling the possible effect of lead vehicles’ brake lights on their future speed profiles.

Tesla's AI team has been working on better modeling the possible effect of lead vehicles' brake lights on their future speed profiles. Previously, the Tesla would ignore the brake lights until it was too late, resulting in an uncomfortable situation where the car would have to brake abruptly to avoid hitting the vehicle in front of it. However, Tesla's new modeling approach will enable it to react sooner and more smoothly to brake lights by predicting the lead vehicle's trajectory and speed. This improvement is not safety-critical but will make users more comfortable and provide a better driving experience.

Improved recall for close-by cut-in cases by 20% by adding 40k autolabeled fleet clips of this scenario to the dataset. Also improved handling of cut-in cases by improved modeling of their motion into ego's lane, leveraging the same for smoother lateral and longitudinal control for cut-in objects.

Recall measures how many real events the system catches, so improving it means fewer false negatives, which here are missed cut-ins. A cut-in that is missed or detected late is what forces a harsh reaction, such as slamming on the brakes when someone cuts in front instead of easing off. This update has improved recall by 20% for close-by cut-in cases, the polite way of saying being cut off. Tesla added 40,000 autolabeled fleet clips of this scenario to the dataset, which should reduce false negatives. The car will also handle being cut off with more control. If slamming on the brakes is not required, gradual slowing is likely what the Tesla will do.
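Recall has a precise definition in machine learning: of all the real events, what share did the detector catch? Its companion metric, precision, asks what share of the reported events were real. The Python sketch below computes both from made-up counts. The numbers are hypothetical and are not Tesla's data.

```python
def recall(true_positives, false_negatives):
    """Share of real cut-ins that the detector actually caught."""
    return true_positives / (true_positives + false_negatives)


def precision(true_positives, false_positives):
    """Share of reported cut-ins that were real."""
    return true_positives / (true_positives + false_positives)


# Hypothetical evaluation set containing 1,000 real cut-ins.
caught, missed, false_alarms = 800, 200, 50

print(f"recall    = {recall(caught, missed):.2f}")           # 0.80
print(f"precision = {precision(caught, false_alarms):.2f}")  # 0.94
```

Missed cut-ins (false negatives) lower recall, while phantom cut-ins (false positives) lower precision. A detector can score well on one and poorly on the other.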

FSD Can Better Recognize Buses

Improved semantic detections for school busses by 12% and vehicles transitioning from stationary-to-driving by 15%. This was achieved by improving dataset label accuracy and increasing dataset size by 5%.

The upgrade in semantic detection means the system is now better at understanding that a detected object is a school bus, rather than simply identifying it as a large vehicle or something else. This matters because school buses require different driving behavior than most other vehicles on the road, and better recognition should increase drivers' confidence around them. An on-screen visualization of a school bus would also be a helpful addition, so drivers can confirm the car has recognized one. Finally, the upgrade should allow the system to detect vehicles transitioning from stationary to moving more accurately, leading to better decisions when navigating the road.

Improved detection of rare objects by 18% and reduced the depth error to large trucks by 9%, primarily from migrating to more densely supervised autolabeled datasets.

Object detection and depth perception advancements are essential for a safer FSD experience. Recent improvements in these areas include a 9% reduction in depth error for large trucks and an 18% improvement in detecting rare objects, thanks to more densely supervised autolabeled datasets. In addition, with better integration of multi-camera video, the car can more accurately perceive the location and size of large trucks, reducing the risk of collisions and helping it stay in its lane. These enhancements increase the safety of everyone on the road and inspire greater confidence in autonomous driving technology.

Crosswalk Behavior Will Change

Improved decision making at crosswalks by leveraging neural network based ego trajectory estimation in place of approximated kinematic models.

Engineers have found a new way to help Teslas make better decisions at crosswalks. In the past, FSD would try to stop as soon as possible when it saw a pedestrian near the crosswalk. However, this could be a problem because sometimes the pedestrian is just standing there and not planning to cross the street. To improve this, Tesla now uses "neural network-based ego trajectory estimation" in place of approximated kinematic models. "Ego" is the car itself, so the network's job is to estimate more accurately where the car will be and when. With a better picture of its own path relative to the pedestrian and the crosswalk, the vehicle can make a better call on whether to keep going or stop. This way, the car won't stop too early and won't cause problems for other vehicles.

Highway Improvements

Added a long-range highway lanes network to enable earlier response to blocked lanes and high curvature.

Tesla's new long-range Highway Lanes Network will enable the car to respond earlier to blocked lanes and high curvature situations, typically on highways and high-speed roads. In addition, it addresses the limitations of the Occupancy Network, which previously allowed the car to see only a limited distance of approximately 100 meters in front of and 20 meters behind the vehicle. With the new long-range Highway Lanes Network, the car can see further ahead, allowing it to detect blocked lanes and curves much sooner, giving it more time to react.

One of the most significant advantages of the long-range Highway Lanes Network is its ability to detect and respond to high curvature situations smoothly. Currently, the car brakes late before a curve, which is neither comfortable nor ideal for safety. With the long-range Highway Lanes Network, the car can anticipate how sharp the curve is, brake earlier, and accelerate more smoothly. This results in better behavior on highways and high-speed back roads, with less sudden braking and a more comfortable ride for passengers.
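Basic physics shows why seeing a curve earlier allows gentler braking. The Python sketch below uses two textbook relations: the speed a car can carry through a curve for a given lateral acceleration, and the constant deceleration needed to reach that speed over a given distance. The comfort limit, curve radius, speeds, and look-ahead distances are all illustrative assumptions, not Tesla parameters.

```python
import math


def comfortable_curve_speed(radius_m, max_lateral_accel=3.0):
    """Speed (m/s) that keeps lateral acceleration v^2 / r at the assumed limit."""
    return math.sqrt(max_lateral_accel * radius_m)


def required_deceleration(current_speed, target_speed, distance_m):
    """Constant deceleration (m/s^2) needed to slow down within distance_m."""
    if current_speed <= target_speed:
        return 0.0
    return (current_speed ** 2 - target_speed ** 2) / (2 * distance_m)


speed = 30.0                                  # about 67 mph, assumed
target = comfortable_curve_speed(150.0)       # assumed 150 m curve radius

for look_ahead_m in (100.0, 250.0):           # hypothetical detection distances
    decel = required_deceleration(speed, target, look_ahead_m)
    print(f"curve seen at {look_ahead_m:.0f} m -> brake at {decel:.2f} m/s^2")
```

Under these assumptions, spotting the curve at 250 meters instead of 100 meters cuts the required braking from 2.25 to 0.90 m/s^2, which is the difference between a noticeable slowdown and a barely perceptible one.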

Improved reliability and smoothness of merge control, by deprecating legacy merge region tasks in favor of merge topologies derived from vector lanes.

The recent improvement to highway merge control replaces the legacy "merge region" tasks with merge topologies derived from vector lanes. Despite the name, vector lanes are not physical lanes on the road. The term appears to refer to the vectorized lane representation Tesla described at AI Day 2022, in which a neural network outputs lanes as connected points and segments, including how lanes split, join, and connect to one another. Deriving merges from that lane graph, rather than from a separate legacy network task, should give the planner a more consistent picture of where two lanes come together. Tesla says the change improves the reliability and smoothness of merges.

Improved lane changes, including: earlier detection and handling for simultaneous lane changes, better gap selection when approaching deadlines, better integration between speed-based and nav-based lane change decisions and more differentiation between the FSD driving profiles with respect to speed lane changes.

Improving full self-driving technology means enhancing lane change capabilities with earlier detection and handling of simultaneous lane changes, better gap selection, and tighter integration of speed-based and navigation-based decisions. One challenge is the gap between navigation planning, which looks roughly 10 to 30 seconds ahead, and the second-to-second decisions driven by surrounding traffic, and critical lane change decisions fall into that gap. The improved system positions the car better and picks the best time for a lane change by combining navigation and speed-based data.

Other Enhancements

Reduced goal pose prediction error for candidate trajectory neural network by 40% and reduced runtime by 3X. This was achieved by improving the dataset using heavier and more robust offline optimization, increasing the size of this improved dataset by 4X, and implementing a better architecture and feature space.

Now we get into some more technical changes in this update. Tesla has reduced "goal pose prediction error for candidate trajectory neural network by 40% and reduced runtime by 3X." First, let's take a step back. The goal pose is the position and orientation where the vehicle needs to end up. Candidate trajectories are the possible paths the car could take to get there. The "goal pose prediction error" for the "candidate trajectory neural network" is the difference between where the neural network predicts the vehicle will end up and where it actually ends up. In other words, it measures how accurately the neural network predicts the vehicle's final position. The goal is to minimize this prediction error so the car can accurately determine the best path to reach its goal pose. These improvements therefore translate into more precise estimates, computed three times faster, and a better user experience.
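To make "goal pose prediction error" concrete, here is one plausible way to measure it in Python: the straight-line distance between the predicted and actual end positions, plus the difference in heading. Tesla has not published how it computes this metric, so the `Pose` class and `pose_error` function below are hypothetical illustrations.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # meters
    y: float        # meters
    heading: float  # radians


def pose_error(predicted, actual):
    """Return (position error in meters, absolute heading error in radians)."""
    position_error = math.hypot(predicted.x - actual.x, predicted.y - actual.y)
    diff = predicted.heading - actual.heading
    # Wrap the angle difference into [-pi, pi] so 359 degrees vs 1 degree counts as 2 degrees.
    heading_error = abs(math.atan2(math.sin(diff), math.cos(diff)))
    return position_error, heading_error


predicted = Pose(x=52.0, y=3.5, heading=0.10)
actual = Pose(x=50.0, y=3.0, heading=0.05)
pos_err, head_err = pose_error(predicted, actual)
print(f"position error {pos_err:.2f} m, heading error {head_err:.2f} rad")
```

Averaged over many test drives, a number like this is what a "40% reduction" would apply to: if the typical position error had been 2 meters, it would drop to about 1.2 meters.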

Improved occupancy network detections by oversampling on 180K challenging videos including rain reflections, road debris, and high curvature.

Tesla has also improved its occupancy network detections, specifically for rain reflections, road debris, and high curvature. The occupancy network is a neural network that uses the car's camera video to predict which parts of the space around the vehicle are occupied by objects, such as other vehicles, pedestrians, or debris. The "occupancy network detections" refer to making those predictions in real time as the vehicle is driving. For example, after rain, the system could mistake reflections on the wet road from nearby signs for real objects. Situations like these are rare in everyday driving data, so engineers oversampled 180,000 challenging videos during training, which means the network sees them more often than it otherwise would.
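Oversampling means deliberately drawing rare, difficult examples more often than their natural share of the data. The Python sketch below shows the general idea using weighted random sampling from the standard library. The clip tags, the `boost` factor, and the `oversample` function are hypothetical, and Tesla's actual training pipeline is not public.

```python
import random


def oversample(clips, hard_tags, boost, k, seed=0):
    """Draw k clips with replacement, weighting hard clips `boost` times higher."""
    rng = random.Random(seed)
    weights = [boost if clip["tag"] in hard_tags else 1.0 for clip in clips]
    return rng.choices(clips, weights=weights, k=k)


# Hypothetical dataset: 90 ordinary clips and 10 rain-reflection clips.
clips = [{"id": i, "tag": "clear"} for i in range(90)]
clips += [{"id": 90 + i, "tag": "rain_reflection"} for i in range(10)]

batch = oversample(clips, hard_tags={"rain_reflection"}, boost=9.0, k=10_000)
share = sum(clip["tag"] == "rain_reflection" for clip in batch) / len(batch)
print(f"rain clips: 10% of the dataset, {share:.0%} of the training batch")
```

With a boost of 9, the ten rain clips carry the same total weight as the ninety ordinary ones, so they make up about half of what the network trains on instead of a tenth.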

Added "lane guidance" module and perceptual loss to the Road Edges and Lines network, improving the absolute recall of lines by 6% and the absolute recall of road edges by 7%.

Improved overall geometry and stability of lane predictions by updating the "lane guidance" module representation with information relevant to predicting crossing and oncoming lanes.

The "lane guidance" module and the perceptual loss are two additions to the Road Edges and Lines network, which detects lane markings and the edges of the road. The release notes do not explain either in detail. The lane guidance module appears to give the network information about the expected lane layout, while a perceptual loss is a training technique that scores the network's output on higher-level features rather than point by point. Together, they improved the absolute recall of lines by six percent and of road edges by seven percent, meaning the network misses fewer lines and road edges that are really there. The lane guidance module may apply to all driving scenarios, including city and highway driving. Improvements to the module's representation can enhance the stability of lane predictions, particularly in complex situations like intersections.
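The word "absolute" matters here. An absolute gain adds percentage points directly to the old score, while a relative gain scales the old score. The short Python sketch below compares the two using a hypothetical 80% baseline, since Tesla does not publish the starting recall.

```python
def absolute_gain(baseline, points):
    """Add percentage points (as a fraction) directly to the baseline."""
    return baseline + points


def relative_gain(baseline, fraction):
    """Scale the baseline by the given fraction."""
    return baseline * (1 + fraction)


baseline_recall = 0.80  # hypothetical starting point

print(f"6% absolute: {absolute_gain(baseline_recall, 0.06):.3f}")  # 0.860
print(f"6% relative: {relative_gain(baseline_recall, 0.06):.3f}")  # 0.848
```

The further the baseline is from 100%, the wider the difference between the two readings, which is why it helps that Tesla states which one it means.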

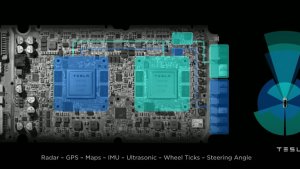

Unlocked longer fleet telemetry clips (by up to 26%) by balancing compressed IPC buffers and optimized write scheduling across twin SOCs.

Tesla has improved its fleet telemetry system by optimizing write scheduling across twin SOCs and balancing compressed IPC buffers, unlocking telemetry clips that are up to 26% longer. IPC stands for inter-process communication, which is the communication between processes in a computer system, and SOC stands for system on a chip, a type of integrated circuit combining multiple computer components into one. In simpler terms, Tesla's improvement allows for better coordination between the two parallel chips in Hardware 3 and 4, which increases the amount of data the car can send back for analysis. Extending clips from an estimated 10 seconds to roughly 12.5 or 13 seconds will give Tesla more context around each event, which can be useful for training the system to detect objects earlier and avoid potential road hazards.
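The clip-length estimate is straightforward percentage arithmetic, sketched below in Python. The 10-second baseline is an assumption, since Tesla's release notes give only the percentage increase.

```python
def extended_clip_length(baseline_s, increase_pct):
    """Clip length in seconds after a percentage increase."""
    return baseline_s * (1 + increase_pct / 100)


baseline_s = 10.0  # assumed previous clip length, not stated by Tesla
print(f"{extended_clip_length(baseline_s, 26):.1f} s")  # 12.6 s
```

Because the release notes say "up to 26%," this is an upper bound. Actual clips may be shorter.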
