Breaking Down Tesla’s Autopilot vs. Wall “Wile E. Coyote” Video

Mark Rober, of glitter bomb package fame, recently released a video titled Can You Fool A Self-Driving Car? (posted below). Of course, the vehicle featured in the video was none other than a Tesla - but there’s a lot wrong with this video that we’d like to discuss.

We did some digging and let the last couple of days play out before making our case. Mark Rober’s Wile E. Coyote video is fatally flawed.

The Premise

Mark Rober wanted to prove whether or not it was possible to fool a self-driving vehicle, using various test scenarios. These included a wall painted to look like a road, low-lying fog, mannequins, hurricane-force rain, and bright beams.

All of these individual “tests” had their own issues - not only because Mark didn’t adhere to any sort of testing methodology, but also because he appeared to be looking for a particular result and edited his tests until he got it.

Interestingly, many folks on X were quick to spot that Mark had been previously sponsored by Google to use a Pixel phone - but was using an iPhone to record within the vehicle - which he had edited to look like a Pixel phone for some reason. This, alongside other poor edits and cuts, led many, including us, to believe that Mark’s testing was edited and flawed.

Flaw 1: Autopilot, Not FSD

Let’s take a look at the first flaw. Mark tested Autopilot - not FSD. Autopilot is a driving aid for lane centering and speed control - and is not the least bit autonomous. It cannot perform evasive maneuvers outside the lane it is in, but it can use Tesla’s full set of safety features, including Automatic Emergency Braking, Forward Collision Warnings, Blind Spot Collision Warnings, and Lane Departure Avoidance.

FSD, on the other hand, is both allowed to leave its lane to avoid a collision and capable of doing so. That means that if Autopilot tried to stop and was unable to, it would still hit whatever obstacle was in front of it, whereas FSD could steer around it.

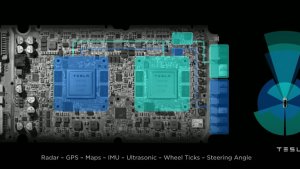

As we continue with the FSD argument - remember that Autopilot is running on a 5-year-old software stack. Sadly, Tesla has not updated the Autopilot stack for quite some time. It seems likely that they’ll eventually bring a trimmed-down version of FSD to replace Autopilot, but that hasn’t happened yet.

Mark later admitted that he used Autopilot rather than FSD because “You cannot engage FSD without putting in a destination,” which is also incorrect. It is possible to engage FSD without a destination, but FSD chooses its own route. Where it goes isn’t within your control until you select a destination, but it tends to navigate through roads in a generally forward direction.

The whole situation, from not having FSD on the vehicle to not knowing you can activate FSD without a destination, suggests Mark is rather unfamiliar with FSD and likely has limited exposure to the feature.

Let’s keep in mind that FSD costs $99 for a single month, so there’s no excuse for him not using it in this video.

Flaw 2: Cancelling AP and Pushing Pedals

Many people on X also followed up with reports that Mark was pushing the pedals or pulling on the steering wheel. When you tap on the brake pedal or pull or jerk the steering wheel too much, Autopilot will disengage. For some reason, during each of his “tests,” Mark closely held the steering wheel of the vehicle.

This comes off as rather odd - at the extremely short distances at which he was enabling AP, there wouldn’t be enough time for a wheel nag or takeover warning to be issued. In addition, we can visibly see him pulling the steering wheel before “impact” in multiple tests.

Over on X, techAU breaks it down excellently on a per-test basis. Mark did not engage AP in several tests, and he potentially used the accelerator pedal during the first test - which means that Automatic Emergency Braking is overridden. In another test, Mark admitted to using the pedals.

Flaw 3: Luminar Sponsored

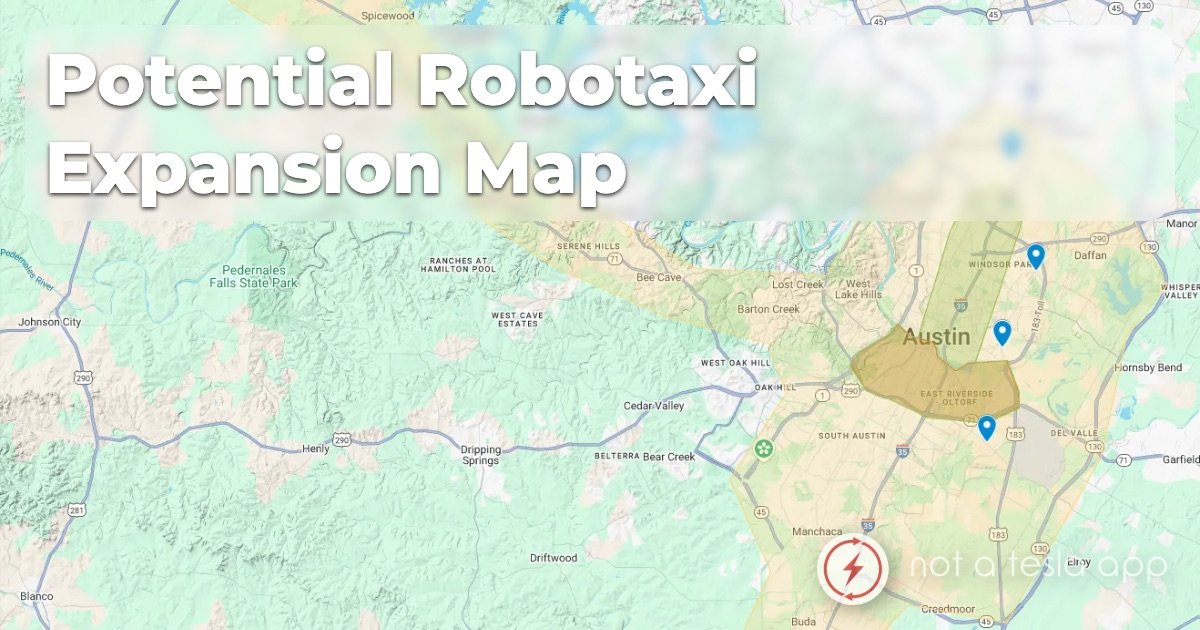

This video was potentially sponsored by a LiDAR manufacturer - Luminar - although Mark says that this isn’t the case. Interestingly, Luminar makes LiDAR rigs for Tesla, which uses them to test ground truth accuracy for FSD. Just as interesting, Luminar’s earnings call was coming up at the time of the video’s posting.

Luminar had linked the video at the top of their homepage but has since taken it down. While Mark did not admit to being sponsored by Luminar, there appear to be more distinct conflicts of interest, as Mark’s charity foundation has received donations from Luminar’s CEO.

Given the positivity of the results for Luminar, it seems that the video had been well-designed and well-timed to take advantage of the current wave of negativity against Tesla, while also driving up Luminar’s stock.

Flaw 4: Vision-based Depth Estimation

The next flaw to address is that the video overlooks the fact that humans and machines can judge depth using vision alone. On X, user Abdou ran the “invisible wall” through a monocular depth estimation model (DepthAnythingV2) - one that works from a single image taken from a single angle. This fairly simple model can estimate the distance and depth of items inside an image - and it was able to differentiate the fake wall from its surroundings easily.

Tesla’s FSD uses a far more advanced multi-angle, multi-image tool that stitches its camera feeds together into a 3D model of the environment around it and then analyzes the result for decision-making and prediction. Tesla’s more refined and complex model would be far better able to detect such an obstacle - and these innovations are far more recent than the 5-year-old Autopilot stack.

While detecting distances is more difficult in a single image, once you have multiple images, such as in a video feed, you can more easily distinguish between objects and determine distances by tracking how quickly each feature in the image grows as the vehicle approaches. Nearby objects grow faster than distant ones, so a real road scene expands unevenly. Essentially, if every part of the scene is growing at the same rate, then it’s all at the same distance and you’re looking at a flat object, like a wall.
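The reasoning above can be sketched in a few lines. This is only an illustration under an idealized pinhole-camera assumption, where a feature's apparent size is inversely proportional to its distance. It is not how Tesla's software works, and the function names, distances, and tolerance are hypothetical values chosen for the example.

```python
def expansion_ratios(depths_m, advance_m):
    """Growth factor of each feature's apparent size after driving forward.

    Under a pinhole model, apparent size is proportional to 1 / distance,
    so a feature at distance z grows by z / (z - advance) once the camera
    has moved `advance` meters toward it.
    """
    if any(z <= advance_m for z in depths_m):
        raise ValueError("camera would reach or pass a feature")
    return [z / (z - advance_m) for z in depths_m]


def looks_flat(ratios, tolerance=0.01):
    """True when every feature grew by (almost) the same factor."""
    return max(ratios) - min(ratios) <= tolerance


# Hypothetical scene 1: a road painted on a wall 40 m ahead.
# Every painted feature is really at the same distance.
painted_wall = [40.0, 40.0, 40.0, 40.0]

# Hypothetical scene 2: a real road, with features receding into the distance.
real_road = [10.0, 20.0, 40.0, 80.0]

advance = 5.0  # meters driven between the two frames

wall_ratios = expansion_ratios(painted_wall, advance)
road_ratios = expansion_ratios(real_road, advance)

print("wall:", [round(r, 3) for r in wall_ratios], "flat?", looks_flat(wall_ratios))
print("road:", [round(r, 3) for r in road_ratios], "flat?", looks_flat(road_ratios))
```

In the painted-wall case every feature grows by the same factor, while on the real road the nearest feature doubles in size and the farthest barely changes. That mismatch is the cue that separates a flat surface from genuine depth.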

Case in Point: Chinese FSD Testers

To make the case stronger - some Chinese FSD testers took to the streets and put up a semi-transparent sheet - which the vehicle refused to drive through or drive near. It would immediately attempt to maneuver away each time the test was engaged - and refused to advance with a pedestrian standing in the road.

Would FSD hit a transparent film wall?

This test showed it just avoids it.

Added English subtitles.

Credit: Douyin 第一智驾 pic.twitter.com/IU8IyuRG01

Aaron Li (@boolusilan), March 18, 2025

Thanks to Douyin and Aaron Li for putting this together, as it makes an excellent basic example of how FSD would handle such a situation in real life.

Flaw 5: The Follow-Up Video and Interview

Following the community backlash, Mark released a video on X, hoping to resolve the community’s concerns. However, this also backfired. It turned out that Mark’s second video showed an entirely different take from the one in the original video, with a different speed, angle, and time of initiation.

Mark then followed up with an interview with Philip DeFranco (below), where he said that there were multiple takes and that he used Autopilot because he didn’t know that FSD could be engaged without a destination. He also answered here that Luminar supposedly did not pay him for the video - even with their big showing as the “leader in LiDAR technology” throughout the video.

Putting It All Together

Overall, Mark’s video was rather duplicitous - he recorded multiple takes to get what he needed, prevented Tesla’s software from functioning properly by intervening, and used an outdated feature set that isn’t FSD, even though the video’s title refers to a self-driving car.

Upcoming Videos

Several other video creators are already working to replicate what Mark “tried” to test in this video.

To get a complete picture, we need to see unedited takes, even if they’re included at the end of the video. The full vehicle specifications should also be disclosed. Additionally, the test should be conducted using Tesla’s latest hardware and software—specifically, an HW4 vehicle running FSD v13.2.8.

In Mark’s video, Autopilot was engaged just seconds before impact. However, for a proper evaluation, FSD should be activated much earlier, allowing it time to react and, if capable, stop before hitting the wall.

A wave of new videos is likely on the way—stay tuned, and we’ll be sure to cover the best ones.